HTTPS payload size profiler

Sniff HTTPS traffic using libpcap and estimate plaintext HTTP payload sizes from ciphertext metadata, which allows an observer to guess or identify the accessed resources.

I once attended a talk about payload-size and -timing analysis in the context of the Tor network. In order to demonstrate similar issues regarding HTTPS, I wrote a simple libpcap-based sniffer as a realistic PoC scenario for a passive adversary.

It turns out that, from the encrypted payload sizes, we can derive the sizes of the corresponding plaintext HTTP requests and responses down to the exact byte. In fact, one could assume this is a known issue, as it is mentioned in several related RFCs (e.g. RFC 5246), with or without suggesting possible countermeasures such as random jitter, padding, or compression. Additionally, by sniffing the SNI, the remote domain is likely to be known anyway (although SNI encryption might change this in the future).

For profiling HTTPS sessions and thus also the underlying HTTP traffic, this tool will:

- use libpcap to filter and selectively handle desired traffic

- parse client and server handshake messages for relevant metadata

- collect timing and size statistics on encrypted payload data

- output this information for each handshake message

- in a post-processing step, coalesce the previous output into single request/response streams with estimated plaintext sizes

Especially in times of “Big Data”, where traffic profiles are very likely to be linkable to actual browsing patterns, this information could then be used to guess or identify the accessed resources.

HTTPS profiling mitigation: Proposed solution

A generic TLS-based solution would of course be desirable. However, there are possible workarounds on the inner HTTP layer that are more practical to implement in the short term. To obfuscate request sizes, an X-Pad header for random or block-based padding could be added. Likewise, GET responses could be split by Range requests into fixed or randomly sized chunks. These could additionally be interleaved, especially if multiple resources from the same destination are queued. The resulting slight traffic overhead and round-trip delay should be acceptable. On the server side, responses could be randomly padded as well.

All of these client-side techniques (header padding, range requests, multiplexing) are either transparent to the server or part of HTTP/1.1 itself. For ranges, at least the fallback to a full response should work, since a server may simply ignore the Range header. Server-side compatibility issues are thus very unlikely. A production-grade implementation also seems feasible, as only the client side needs to change and the security layer does not need to be touched at all. This could, for example, be done in a privacy-aware browser, a browser plugin, or an intermediate or local (plaintext) proxy.
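Both client-side techniques fit in a few lines. The following Python sketch is not part of the tool: the helper names `x_pad_header` and `range_headers` are hypothetical, the 256-byte padding block is an arbitrary assumption, and `range_headers` assumes the total resource size is already known (for example from the `Content-Range` header of a first, small range response).

```python
import random


def x_pad_header(request_len: int, block: int = 256) -> str:
    """Hypothetical helper: build an 'X-Pad' header line (including CRLF) that
    grows a request of `request_len` bytes to the next multiple of `block`."""
    overhead = len("X-Pad: \r\n")  # bytes of the header line without filler
    filler = -(request_len + overhead) % block  # bytes missing to the boundary
    return "X-Pad: " + "x" * filler + "\r\n"


def range_headers(total_size: int, min_chunk: int, max_chunk: int,
                  rng: random.Random) -> list[str]:
    """Hypothetical helper: split [0, total_size) into randomly sized chunks
    and return one 'Range' header line per chunk, in order."""
    headers = []
    start = 0
    while start < total_size:
        size = rng.randint(min_chunk, max_chunk)
        end = min(start + size, total_size) - 1  # Range end is inclusive
        headers.append(f"Range: bytes={start}-{end}")
        start = end + 1
    return headers


if __name__ == "__main__":
    print(len(x_pad_header(375)))  # 137, since 375 + 137 = 512
    for h in range_headers(10_000, 1_000, 3_000, random.Random(42)):
        print(h)
```

Block-based padding only hides the exact request length within a 256-byte bucket; drawing the filler length at random instead would give the random variant. The chunk requests could then be interleaved across several queued resources.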

Recording and analyzing HTTPS traffic

The main binary captures HTTPS traffic and reassembles TLS handshakes. Using a very basic parser, it then extracts timings, handshake and content types, payload lengths, hostnames, and cipher suites. Below is an example of sniffing all local HTTPS traffic during a website visit:

./https_profiler eth0 "port 443" | tee capture.log

> 192.168.1.2:60103 -> 193.99.144.85:443 1452016909273385 ct: 22, len: 512

> 192.168.1.2:60103 -> 193.99.144.85:443 1452016909273385 ht: 1, len: 508, ver: 3/1, sni: www.heise.de

> 192.168.1.2:60104 -> 193.99.144.85:443 1452016909283443 ct: 22, len: 512

> 192.168.1.2:60104 -> 193.99.144.85:443 1452016909283443 ht: 1, len: 508, ver: 3/1, sni: www.heise.de

> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909286598 ct: 22, len: 512

> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909286598 ht: 1, len: 508, ver: 3/1, sni: 1.f.ix.de

[…]

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909309139 ct: 22, len: 87

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909309139 ht: 2, len: 83, ver: 3/3, cs: c030, comp: 00

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909309139 ct: 20, len: 1

[…]

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909331300 ct: 22, len: 40

> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909331432 ct: 20, len: 1

> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909331432 ct: 22, len: 40

[…]

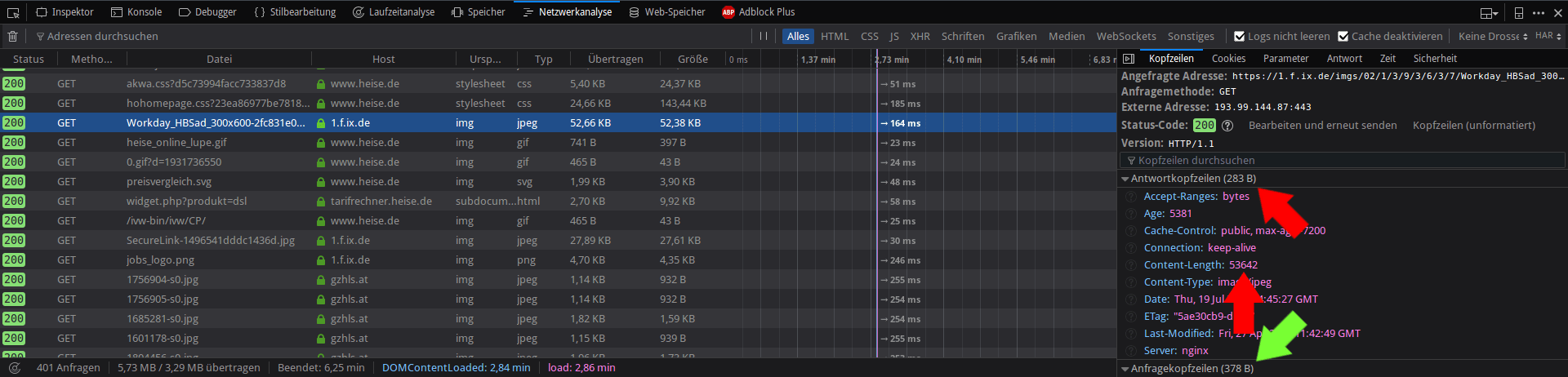

> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909656534 ct: 23, len: 402 <-- see screenshot below…

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909679980 ct: 23, len: 1472

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909680896 ct: 23, len: 1472

> 193.99.144.87:443 -> 192.168.1.2:34732 1452016909681090 ct: 23, len: 1472

[…]
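The `ct` and `ht` fields correspond to the 5-byte TLS record header (content type, version, length) and to the 4-byte handshake header (type, 24-bit length) at the start of a plaintext handshake record, which is why a 512-byte ClientHello record shows up as a 508-byte handshake message. A minimal Python sketch of such a parser over one direction of an already reassembled TCP stream could look as follows. It is an illustration, not the tool's actual parser: it reports the record-layer version, skips SNI extraction, and ignores handshake messages that span several records or share one record. After ChangeCipherSpec, handshake records are encrypted, so no handshake header is decoded (compare the `ct: 22, len: 40` lines without `ht` above).

```python
from dataclasses import dataclass
from typing import Iterator, Optional

CT_CHANGE_CIPHER_SPEC = 20
CT_HANDSHAKE = 22


@dataclass
class Record:
    ct: int                      # content type (20, 21, 22, 23)
    ver: tuple[int, int]         # record-layer version, e.g. (3, 3)
    length: int                  # record payload length, without the 5-byte header
    ht: Optional[int] = None     # handshake type, if the record is plaintext
    hlen: Optional[int] = None   # 24-bit handshake message length


def parse_records(stream: bytes) -> Iterator[Record]:
    """Walk the TLS records of one direction of a reassembled TCP stream."""
    pos = 0
    encrypted = False  # handshake records after ChangeCipherSpec are opaque
    while pos + 5 <= len(stream):
        ct, major, minor = stream[pos], stream[pos + 1], stream[pos + 2]
        length = int.from_bytes(stream[pos + 3:pos + 5], "big")
        body = stream[pos + 5:pos + 5 + length]
        if len(body) < length:
            break  # record not complete yet; wait for more TCP data
        rec = Record(ct, (major, minor), length)
        if ct == CT_HANDSHAKE and not encrypted and length >= 4:
            rec.ht = body[0]
            rec.hlen = int.from_bytes(body[1:4], "big")
        elif ct == CT_CHANGE_CIPHER_SPEC:
            encrypted = True
        yield rec
        pos += 5 + length
```

Printing `ct: {rec.ct}, len: {rec.length}` for each yielded record, plus `ht`/`hlen` where set, reproduces the general shape of the log lines above.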

In a post-processing step, this output can then be used to estimate the respective plaintext sizes by merging presumed request/response payloads. The request and response length columns show the plaintext lengths derived from the summed ciphertext lengths and the negotiated cipher suite:

./cap2reqs.py < capture.log

# req_t | res_t | end_t | req_len | res_len | sni

0 | 23506 | 50483 | 375 | 49114 | www.heise.de

221923 | 246958 | 268828 | 394 | 1741 | www.heise.de

[…]

337063 | 360509 | 388918 | 378 | 53925 | 1.f.ix.de <-- see screenshot below

[…]

716392 | 752257 | 752257 | 335 | 1167 | gzhls.at

720929 | 825303 | 825303 | 331 | 655 | www.heise.de

[…]

The timestamp columns for the request, response start, and response end (in microseconds, relative to the first request) could, for example, be used to generate a flowchart or to determine possible resource dependencies.
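A simplified version of this coalescing step can be sketched in Python. This is a hypothetical reimplementation, not `cap2reqs.py` itself: it assumes the log format shown above (tolerating the leading `>`), treats the endpoint on port 443 as the server, assumes an AES-GCM suite with a fixed 24-byte overhead per record, starts a new request whenever the client sends application data after the server has begun answering, and ignores pipelining.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Optional

GCM_OVERHEAD = 24  # assumed: 8-byte explicit nonce + 16-byte tag per record

# Matches lines such as
# "> 192.168.1.2:34732 -> 193.99.144.87:443 1452016909656534 ct: 23, len: 402"
LINE = re.compile(
    r"^>?\s*(?P<src>\S+) -> (?P<dst>\S+) (?P<ts>\d+) "
    r"(?:ct: (?P<ct>\d+)|ht: \d+), len: (?P<len>\d+)(?:.*?sni: (?P<sni>\S+))?"
)


@dataclass
class Exchange:
    req_t: int                    # timestamp of the first request record
    sni: str
    req_len: int = 0              # estimated plaintext request length
    res_len: int = 0              # estimated plaintext response length
    res_t: Optional[int] = None   # timestamp of the first response record
    end_t: Optional[int] = None   # timestamp of the last response record


def coalesce(lines: Iterable[str]) -> list[Exchange]:
    sni: dict[str, str] = {}            # client endpoint -> SNI hostname
    current: dict[str, Exchange] = {}   # client endpoint -> open exchange
    exchanges: list[Exchange] = []
    for line in lines:
        m = LINE.match(line)
        if not m:
            continue
        ts = int(m["ts"])
        from_client = m["dst"].endswith(":443")
        client = m["src"] if from_client else m["dst"]
        if m["sni"]:
            sni[client] = m["sni"]
        if m["ct"] != "23":
            continue  # only application data records carry HTTP payload
        plain = int(m["len"]) - GCM_OVERHEAD
        ex = current.get(client)
        if from_client:
            # Client data after a (partial) response starts a new request.
            if ex is None or ex.res_t is not None:
                ex = Exchange(req_t=ts, sni=sni.get(client, "?"))
                current[client] = ex
                exchanges.append(ex)
            ex.req_len += plain
        elif ex is not None:
            if ex.res_t is None:
                ex.res_t = ts
            ex.end_t = ts
            ex.res_len += plain
    return exchanges


def format_table(rows: list[Exchange]) -> list[str]:
    """Render rows in the table format above, relative to the first request."""
    t0 = min((ex.req_t for ex in rows), default=0)

    def rel(t: Optional[int]) -> str:
        return "-" if t is None else str(t - t0)

    out = ["# req_t | res_t | end_t | req_len | res_len | sni"]
    for ex in rows:
        out.append(f"{rel(ex.req_t)} | {rel(ex.res_t)} | {rel(ex.end_t)} | "
                   f"{ex.req_len} | {ex.res_len} | {ex.sni}")
    return out
```

Applied to a log file, `format_table(coalesce(open("capture.log")))` yields one line per presumed request/response pair. With the 1.f.ix.de lines above, the 402-byte request record becomes a `req_len` of 378, as in the table.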

As promised, the derived lengths do indeed match the actual HTTP plaintext sizes:

Currently, only the ECDHE-(ECDSA|RSA)-AES(128|256)-GCM-SHA(256|384) cipher suites are supported (this could easily be extended, though). These modern cipher suites are considered secure (they even provide Perfect Forward Secrecy) and are widely used by default.
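For these suites the per-record overhead is constant, which is what makes a byte-exact estimate possible. Assuming TLS 1.2 AES-GCM as specified in RFC 5288 (an 8-byte explicit nonce and a 16-byte authentication tag per record, no padding), and taking the logged `len` values as record payload lengths without the 5-byte record header, the plaintext length of a message spread over records of ciphertext lengths ℓ_1, …, ℓ_n is:

```latex
\ell_{\text{plain}} = \sum_{i=1}^{n} \left(\ell_i - 8 - 16\right) = \sum_{i=1}^{n} \ell_i - 24n
```

Two checks against the capture above: the 402-byte request record to 1.f.ix.de gives 402 − 24 = 378, the `req_len` reported in the table, and each 40-byte encrypted handshake record after ChangeCipherSpec gives 40 − 24 = 16 bytes, exactly a 4-byte handshake header plus the 12-byte Finished verify_data.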

Refining results by dependency graphs

If we assume some estimation error (due to the caveats mentioned below) and possible size collisions, we might not be able to tell individual resources apart with sufficient certainty. However, usually more than a single request/response will be available: due to links, embedded images, and other resources such as CSS/JS (which are most probably shared across pages, though), we could form a dependency graph that, compared with the actually measured path, would allow refining the certainty step by step.

This additional post-processing step requires the site’s contents to be publicly available, either in advance as a precomputed sitemap or on demand by selective crawling. So one of the main concerns regarding this kind of HTTPS “fingerprinting” should be publicly accessible resources with “mixed” content, e.g., forums, pr0n, Wikipedia, etc. Using edge probabilities and weights on this graph should be straightforward and could possibly be combined with, for example, training a neural network for automation.
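One simple, unweighted way to use such a graph is to keep, for every observed response size, the set of resources that match the size within a tolerance and are linked from a candidate of the previous step, and then to prune backwards so that ambiguities are resolved by later observations. The Python sketch below assumes a hypothetical `graph` (resource → linked or embedded resources) and `size` map, for example from a crawl, and a strictly sequential browsing path; edge weights, probabilities, and parallel resource loading are left out.

```python
def match_path(graph: dict[str, list[str]], size: dict[str, int],
               observed: list[int], tolerance: int) -> list[set[str]]:
    """Hypothetical helper: per observed response size, return the resources
    consistent with the whole observed sequence (toy model)."""
    if not observed:
        return []

    def fits(node: str, obs: int) -> bool:
        return abs(size[node] - obs) <= tolerance

    # Forward pass: candidates must fit the size and be linked from a
    # candidate of the previous step.
    steps = [{n for n in graph if fits(n, observed[0])}]
    for obs in observed[1:]:
        reachable = {m for n in steps[-1] for m in graph[n]}
        steps.append({m for m in reachable if fits(m, obs)})

    # Backward pass: drop candidates without a surviving successor.
    for i in range(len(steps) - 2, -1, -1):
        steps[i] = {n for n in steps[i] if any(m in steps[i + 1] for m in graph[n])}
    return steps


if __name__ == "__main__":
    # Toy site; all sizes are invented for illustration only.
    graph = {"/": ["/a", "/b"], "/a": ["/img1"], "/b": ["/img2"],
             "/img1": [], "/img2": []}
    size = {"/": 5000, "/a": 1200, "/b": 1204, "/img1": 800, "/img2": 30000}
    print(match_path(graph, size, observed=[5003, 1202, 30000], tolerance=5))
    # [{'/'}, {'/b'}, {'/img2'}]
```

In the toy example, the second observation alone cannot distinguish `/a` from `/b`, but the third one can only follow `/b`, so the backward pass removes `/a`. Replacing the candidate sets with probability weights would turn this into a standard forward/backward filtering scheme.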

If there is no plaintext data or resource dependency graph available, browsing pattern correlation methods could still reveal sensitive information.

Caveats and imprecisions of statistical analysis

Apart from missing support for correlating a whole session to a given weighted dependency graph, there are a lot of other possible improvements left to do. Please note that this tool has been written as a PoC for future experiments in only a couple of hours and thus should not be considered especially useful, feature-complete, or reliable.

Additionally, there are several HTTP/S properties that can degrade the precision of this approach, which in turn suggests possible mitigations.

- Request Headers

  - From the request size, we could determine the URL length as additional input, provided that we can estimate the length of the remaining headers. Note that most variable-size headers can be guessed or constrained using the assumed SNI (Host), the User-Agent (which can even be fingerprinted from observed TLS behaviour alone), the locale, the presence of cookies, and the Referer.

- Response Headers

  - Estimating the response header overhead is more critical. While most response headers are static, variable-sized ones such as caching headers, cookies to be set, or the Content-Type should be constrained as well as possible.

- Compression

  - While TLS-layer compression seems uncommon, HTTP content-encoding compression of text via gzip is ubiquitous. This compression should be stable in the sense that output lengths are roughly predictable for each server-side setting, but it squeezes resources into a narrower interval of possible lengths, which makes length collisions more likely and thus reduces our precision.

- Caching

  - Given that cache hits carry no response payload and If-* headers are (usually) of fixed size, caching unfortunately leaves us only with the information derivable from (mostly) the request headers, as mentioned before. However, it might be safe to assume that only the first visit is interesting; if it was captured, subsequent cache hits can be identified more reliably by comparing the header sizes plus revalidation headers.

- Padding

  - If the negotiated cipher is a block cipher in a mode that uses padding (such as CBC), one has to use fuzzy matching, with an expected error of about half the block size.

- HTTP/2

  - Header compression and pipelining/multiplexing will most probably render this approach nearly useless.

- Keep-Alive/Connection Pooling

  - Browsers that issue multiple requests in parallel or over the same connection should not be an issue, as the request/response pairs can still be identified using ordering and timing information.

- Cipher Support

  - Only a few cipher suites (without compression) are supported at the moment.

- Evaluation

  - There is no automated framework yet for training a neural network (or even just maintaining a simple database) to estimate the header overhead or to build a request flow graph.

- Resilience

  - The lower-layer TCP/IPv4 parsing is very naive and currently cannot handle (but can detect) fragmented, retransmitted, or out-of-order packets.
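To make the padding caveat concrete: the tool does not support CBC suites, but assuming TLS 1.1/1.2 AES-CBC with a 16-byte explicit IV, HMAC-SHA1 (20-byte MAC), and minimal padding, each record only narrows the plaintext length down to a window of 16 candidates. The following Python sketch computes that window for one or several records; the function names are hypothetical. Note that TLS permits up to 255 bytes of padding, so an implementation that pads more than necessary would widen the window.

```python
def cbc_plaintext_range(record_len: int, mac_len: int = 20, block: int = 16) -> range:
    """Possible plaintext lengths for one AES-CBC record, assuming an explicit
    IV of one block and minimal padding (1..block bytes incl. length byte)."""
    body = record_len - block  # strip the explicit IV
    if body <= 0 or body % block or body < mac_len + 1:
        raise ValueError(f"{record_len} is not a valid CBC record length")
    # body = plaintext + MAC + padding, padding (with its length byte) in 1..block
    return range(max(0, body - mac_len - block), body - mac_len)


def total_range(record_lens: list[int], mac_len: int = 20, block: int = 16) -> range:
    """Window for a message spread over several records: sum of per-record bounds."""
    ranges = [cbc_plaintext_range(n, mac_len, block) for n in record_lens]
    return range(sum(r.start for r in ranges), sum(r.stop - 1 for r in ranges) + 1)
```

An 80-byte record, for instance, yields `range(28, 44)`. Matching then tests whether a candidate resource's size (plus estimated header overhead) falls into the window instead of comparing it against a single value.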