Music library clustering

Clustering a music library into playlists while only using extracted audio features. Information retrieval methods such as PCA, Autoencoder, and k-Means are evaluated.

Dynamic playlists, “radio modes”, or music recommendation systems often rely on listening habits or human annotation (artists, genres). Information retrieval on a huge listener database might not be possible and tag labels might be misleading, though. For example, the latter falls short when it comes to unusually “soft/hard” pieces of music from otherwise different artists/genres that are still perceived similar.

This is an attempt to explore whether pure music information retrieval yields audio properties that correlate to a similar “mood”, while explicitly excluding metadata from the whole process.

Samples

For demonstration purposes, as dataset (that does not push browser bandwidth and SVG rendering limits) we pick 50 random songs from 20 arbitrary genres.

In the following, the genre information (as obtained by mutagen) is never encoded as feature dimension and only used for clustering evaluation and plotting.

Note that the genre list is not manually sorted – nearby colors are not necessarily “related”. Seems this is not an easy problem to solve, see the section on genre labels below for details.

Feature Extraction

Various audio features are extracted using librosa. In order to avoid encoding timeseries, each file is assigned single values, namely the overall average, median, and standard deviation – after clamping 1% vast outliers. For example, the spectral centroid, sorted by median and colored by genre:

In especially when re-sorting by genre, there seems indeed some pattern emerging that could help telling them apart:

- Centroid

- The mean frequency of the magnitude spectrogram. As there is little sense in averaging over per-frame frequencies, the results are converted to a mel scale, which behaves more linearly as it represents the “perceived” frequency rather than a logarithmic measurement.

- Bandwidth

- Spectral bandwidth as a measure of how narrow or wide the signal is around the centroid, or how much this varies over time.

- Flatness

- Spectral flatness as additional measure of noisiness.

- Zero Crossings

- How often the signal crosses zero, i.e., flips signs.

- Roll-On/-Off

- Where the lowest 10% and top 10% energy is contained.

- Spectrum

- The absolute magnitude for six bark band bins (100, 510, 1080, 2000, 3700, and 15500 Hz).

- Tempogram

- The overall detected BPM value seems to be not telling much. Instead, something like the “flatness” on the self-recurrence beat detection is used, which indicates a more varying or constant tempo – as simpler replacement for high frequencies in the linear tempogram itself.

- HPSS

- RMS difference of the decomposition into harmonic and percussive components.

As feature vector, the 16 most promising values are picked. After independent normalization, the full matrix of all features for all input songs can be visualized as:

Note that some values are negated: Flipping a dimension and thereby simply moving to another quadrant should not be important for encoding or clustering. However, this avoids negative PCA factors lateron, which can be interpreted as “contradicting axes”.

Encoding

Treating each feature as independent dimension is very likely to skew results due to redundancy, correlation, or differing variance. Also, the contained information (or entropy) in the dataset is most likely less than the full 16D input. Such dependencies inside the dataset can be accounted for by extracting and encoding the most significant information bits instead.

Principal Component Analysis

The well-defined PCA serves as baseline for the dimensionality reduction from 16D into 1D, 2D, 3D, and 8D. Intuitively, this tries to minimize/maximize the variance across the dataset by coordinate system transformation and sorting.

Here, further scaling seems to be recommended. The input features thus additionally pass StandardScaler adjustments, which centers at zero and divides by variance.

Sorting by the resulting major component already shows some grouping by genre. This one-dimensional ordering could even serve as a crude linear playlist.

Two dimensions reveal even more interesting clusters (same data, flipped axes for different aspects and histograms):

Plotting the first three dimensions yields, also just from different angles:

While these three components together cover 65% of the original variance, the (not shown) 8D representation usually accounts for over 90%.

Autoencoder

As alternative to PCA, the normalized feature vectors are additionally fed into an autoencoder for comparison. Intuitively, this can reduce dimensions for non-linear dependencies more freely, at the cost of less stable and barely analytically interpretable results.

Shown is one of the most straight-forward 16-to-3 dimensional neural network, consisting of sigmoid-activated dense layers. The goal is to reconstruct inputs while passing a three-node bottleneck.

Using only a single encoding node for a linear ordering yields:

Again, two dimensions already show even more promising patterns:

Given the oblivious plotting by genre, the training for three dimensions produces:

Overall, the results look equally acceptable to PCA at first glance. This is not overly surprising, though, given the autoencoder implicitly optimizes for a similar goal.

Clustering

Even without clustering, the original features or any reduced dimension embedding can already serve as basis for recommendation systems, e.g., using local neighborhood search by simple euclidean distance. For grouping into different meta-genres, moods, or playlists, discrete cluster identifiers need to be assigned for each measurement.

Note that while previous plots seemingly already produced good clustering, the “external” coloring might have been misleading: The nearby values barely form the typical convex shapes of separation and varying density.

k-Means

For example, splitting the three-dimensional PCA space into 16 clusters using the well-established k-Means clustering method gives:

Note that these are the same plots from the encoding step, but colored by assigned cluster identifier and not genre.

Agglomerative

Alternatively, Agglomerative as hierarchical method on the same data (targeting 16 clusters of the three-dimensional PCA) produces:

One advantage of hierarchical approaches is that irregular non-euclidean spaces are supported, as only pairwise distances are needed – for example by introducing a per-genre bias. Such a more “supervised” approach is introduced the section on genre labels below.

Evaluation Metrics

For clustering performance evaluation, several readily available metrics are depicted, namely: Adjusted Rand Index, Adjusted Mutual Information, Homogeneity, Completeness, and the Fowlkes-Mallows Index. However, exact reconstruction of the genre labeling was not the goal, but instead bringing similar songs with different genres together. A large overlap should be a good sign, still.

Evaluated are the combinations of the two different clustering methods on each of the six encoded spaces: 3D PCA and autoencoder, 8D PCA and autoencoder, full 16D normalized autoencoder input features, and full 16D PCA input features scaled by deviation. The results from splitting into 2–20 different clusters are taken into account.

By eyeballing, seems that k-Means on the eight-dimensional autoencoded values performs best. The corresponding playlists (clusters) can be mapped to genre tags as follows:

- The generated playlists as end result seem not perfectly convincing, but could become viable after a few manual tweaks.

- The intermediate one-dimensional encodings can already serve as a crude linear playlist for themselves.

- The intermediate raw or embedded data as distance/similarity matrix could be used for recommendation systems, such as dynamic playlists for MPD.

- A more supervised approach could be expanded on, making use of available categorical information such as genres, artists, or folders – see the next section.

Genre Labels

All the above did not use genre information but for colorization, which requires a somehow “semantical” sorting. Also, as such tags are usually available, this initial requirement can be weakened to allow genres to influence the actual encoding.

Categorical Sorting

The most simple way to produce plots for validation could be to assign random (hash-based) or sorted/discrete (linear colormap) genre colors. Alternatively, a partial ordering or value of similarity/difference can be enough to determine an overall sorting that obeys distance. For example:

- At least some string-based metric such as Levenshtein or from difflib would give a crude similarity matrix between genre names.

- An additional label-trained autoencoder could provide corresponding embeddings.

- Existing PCA or encoder results could be re-used and fed back. For example, the one-dimensional genre-less embeddings might serve as basis for a single but non-linear encoding.

More generally, averaging the per-genre 3D PCA values yields a three-dimensional embedding as follows:

Finding the optimal ordering that minimizes the sum of individual (3D-euclidean) distances can then be reduced to the Travelling Salesman Problem, which is (un-)surprisingly vastly out of scope: Realistic 100 different genres would already result in 100! ≈ 2524 permutations to try. Thus, the local search solver from python-tsp is used as heuristic instead.

Encoding Genres

Autoencoders are not particularly well-suited for categorical inputs, usually resorting to ordinal or one-hot encoding. For the latter, N genres would result in N additional input features as N-sized binary vector. But as there is some kind of “relationship” involved, there should even be more extractable information available than just (not) having the same label.

The transformed PCA output however also resembles a readily usable embedding – as three-dimensional ordinal feature set to be used alongside the actual audio features.

Hierarchical Clustering

Such genre embeddings could also be used in the context of additional clustering dimensions, but the corresponding information should already be represented by the encoded coordinates.

Alternatively, the agglomerative clustering approach comes with the useful property that it only bases on a pairwise distance matrix, which can be precomputed arbitrarily. This allows to use an irregular non-euclidean space beyond the encoded dimensions, such as when “contracting” distances by a certain factor upon genre match.

As result of this more “supervised” approach, when enabling genre-bias mode, both the encoding and clustering output look even more pronounced:

Installation & Usage

python3 -m venv venv

./venv/bin/pip install --require-virtualenv -U -r requirements.txt

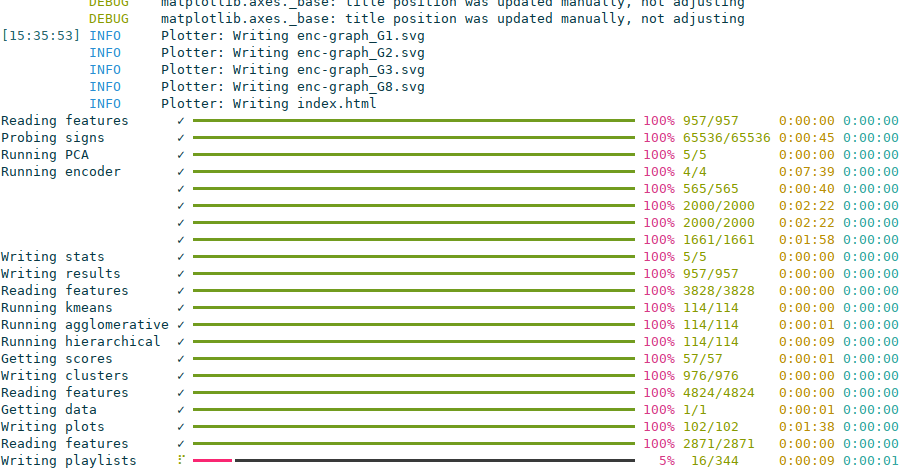

./venv/bin/python3 -m mir_cluster_playlist --extract-in-playlist my-library.m3u --out-dir out/

usage: __main__.py [-h] --extract-in-playlist M3U --out-dir DIR [--debug] [--concurrency NUM]

[--extract-cache 0|1] [--extract-limit NUM] [--epoch-limit NUM] [--genre-bias NUM]

Extract audio features and metadata, encode using PCA or Autoencoder, run clustering, and plot results.

options:

-h, --help show this help message and exit

--extract-in-playlist M3U main input of files to process (default: None)

--out-dir DIR output directory for various files (default: None)

--debug enable debug logging, extra plotting, and additional checks (default: False)

--concurrency NUM number of threads for audio processing (default: 1)

--extract-cache 0|1 force re-usage of audio feature extraction (default: auto)

--extract-limit NUM process at most this number of input audio files (default: None)

--epoch-limit NUM maximum number of autoencoder epochs, might stop earlier (default: 2000)

--genre-bias NUM use genre embeddings for encoding and guided hierarchical clustering (default: 0.0)